Richard Sproat

Computational Linguist // Research Scientist

〒105-6415、東京都港区虎ノ門1丁目17‐1、虎ノ門ヒルズビジネスタワー15階

Toranomon Hills Business Tower 15F, 1-17-1 Toranomon, Minato-ku, Tokyo 105-6415, JAPAN

I am a computational linguist (which means that I have some things in common with grapefruit).

I am a Research Scientist at Sakana.ai in Tokyo. My work at Sakana focuses on natural language processing, image processing, and AI.

Prior to that I was a Research Scientist at Google, first in New York, then in Tokyo.

News

Andrew Robinson's review of our book, Tools of the Scribe: How Writing Systems, Technology, and Human Factors Interact to Affect the Act of Writing, is now out in Nature.

Some Current Research Interests

At Sakana.ai, I work on various projects relating to Natural Language Processing and Artificial Intelligence. Some recent and/or ongoing projects:

- Reasoning-based evaluation of translation (paper, code).

- Generation of descriptions of Japanese Kamon (family crests) (code, data, paper forthcoming).

- Constructed languages using LLMs (paper, code).

- RePo: Language Models with Context Re-Positioning (paper, code, blog and live demo).

- Modeling language change.

- One ongoing project in this area is on the relevance of heraldry to writing systems (work done in association with the VIEWS Project at Cambridge University: slides; talk).

- I also presented at a conference on rongorongo, "Advancement in the Studies of the Easter Island Script" in Mexico City, November 2026: slides; talk.

At Google (2012-2024)

At Google I mostly worked on text normalization: my former group developed various machine learning approaches to the problem of normalizing non-standard words in text, and I was particularly interested in the promise (and limitations) of approaches using recurrent neural nets. In September 2019, I moved to Google Tokyo and worked on end-to-end speech understanding.

I continued to maintain some "side-bar interests", including computational models of the early evolution of writing, the statistical properties of non-linguistic symbol systems, and a collaborative translation of Wolfgang von Kempelen's Mechanismus der menschlichen Sprache, which was published in 2017.

At the Oregon Health & Science University (2009-2012)

Prior to joining Google I was involved in a number of projects:

- An NSF-funded project on text-to-scene conversion: "RI: Medium: Collaborative Research: From Text to Pictures". In our piece of the project we looked at using Amazon's Mechanical Turk to populate a frame-like ontology with semantic information (e.g. parts of objects, how objects are typically used, frame information about verbs, etc.).

- An NIH (R01) funded project on "Computational characterization of language use in autism spectrum disorder".

- An NIH (K25) funded project on "NLP for Augmentative and Alternative Communication in Adults". The plan was to develop systems that can predict a set of plausible responses for an AAC-system user given the discourse context. In Spring of 2010, I taught (with Brian Roark) a Seminar on Speech and Language Processing for Augmentative and Alternative Communication.

- An NSF-funded project on Corpora of Non-Linguistic Symbol Systems.

- A CIA-funded project on text normalization and genre adaptation for social media texts.

- OpenGRM: I was involved in open-sourcing various tools for finite-state and other language processing, including some of the Google tools for grammar development.

At the University of Illinois, Urbana-Champaign (2003-2009)

- Multilingual spoken term detection: I was team leader of the 2008 Johns Hopkins Center for Language and Speech Processing workshop on this topic.

- Language modeling for colloquial Arabic speech recognition.

- Named entity detection and transliteration for multiple languages.

- Prediction of prosody from text for affective speech synthesis.

- Acoustic and pronunciation modeling for accented standard Chinese. (Follow-up on work done at the Hopkins 2004 workshop.)

- The relation of layout to phonological awareness in scripts of South Asia.

- Automated Methods for Second-Language Fluency Assessment.

- Interpretation of location descriptions for botanical/zoological specimen labels.

Once again, I have long been interested in writing systems; see, for example, some work I was doing on approximate string matching in the Easter Island rongorongo script. I also ran (with Jerry Packard) a reading group centered around Hannas' controversial thesis relating Asia's supposed technological creativity gap to the Chinese writing system.

At AT&T Labs—Research and Bell Laboratories (1985-2003)

Before joining the faculty at UIUC I was in the Information Systems and Analysis Research Department headed by Ken Church at AT&T Labs—Research, where I worked on Speech and Text Data Mining: extracting potentially useful information from large speech or text databases using a combination of speech/NLP technology and data mining techniques.

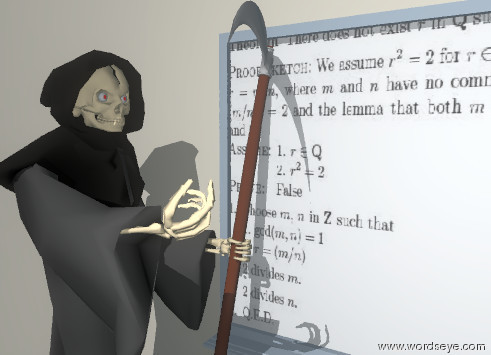

Before joining Ken's department I worked in the Human/Computer Interaction Research Department headed by Candy Kamm. My most recent project in that department was WordsEye, an automatic text-to-scene conversion system. The WordsEye technology is now being developed at WordsEye.com. WordsEye was particularly good for creating surrealistic images that I can easily conceive of but that are well beyond my artistic ability to execute. And we were doing this 20 years before the "AI revolution"! All of the following images were generated from text descriptions of the scene:

Prior to joining AT&T Labs in 1999 I worked on Text-to-Speech Synthesis at Bell Labs, Lucent Technologies. Among other things, I was responsible for the multilingual text processing module of the Bell Labs Multilingual TTS System.